Machine learning tends to require quite considerable hardware due to the large number of calculations required. Whilst it is easier than ever to rent your hardware from the major service providers like Amazon and google and from specialist Machine Learning cloud providers like Databricks, there is a certain satisfaction in building your own ML workstation. and if you are serious about building ML models you may actually find that this works out cheaper too.

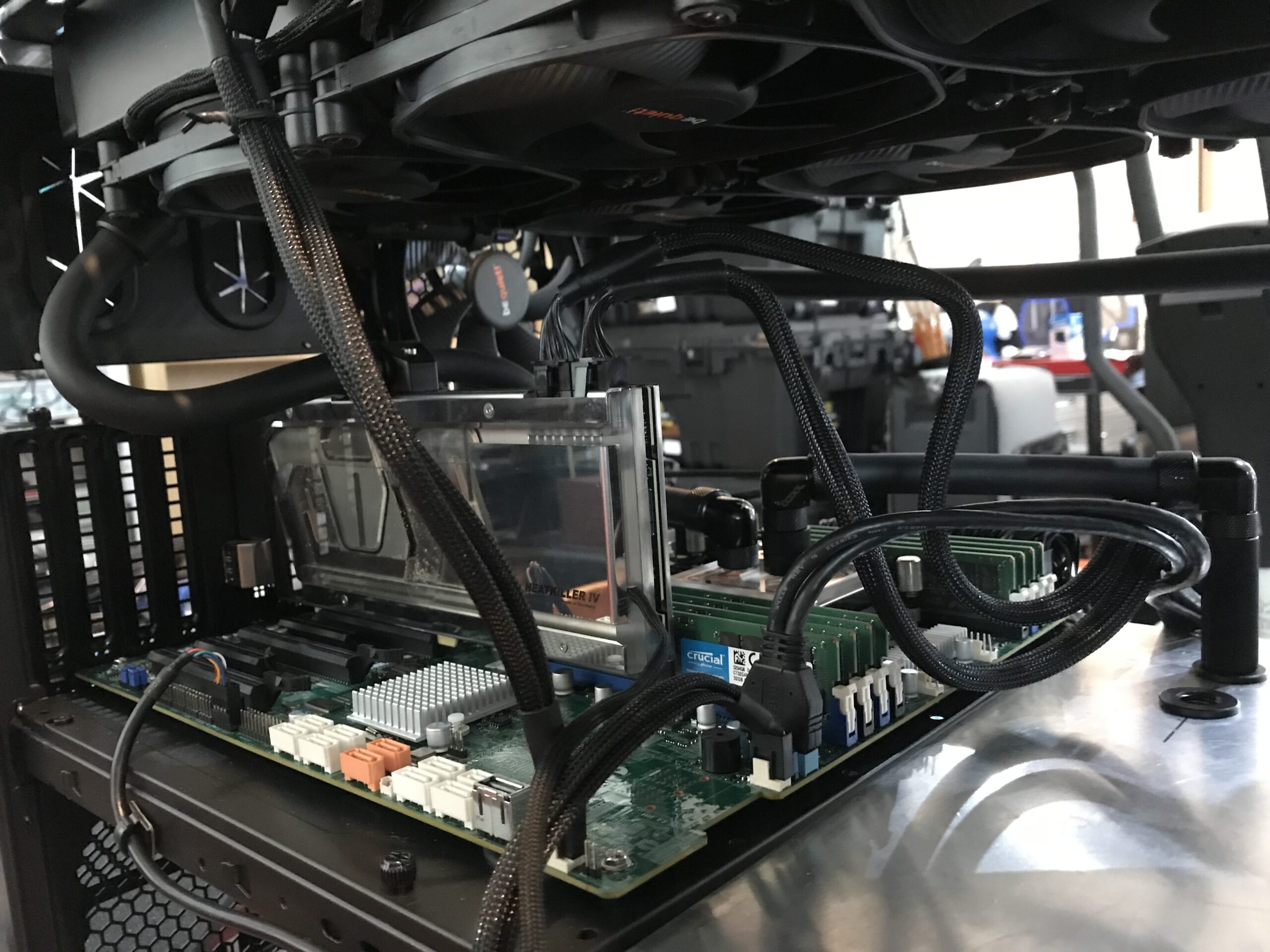

The first thing to consider is the CPU you may want to use. Unlike Gaming, where faster but fewer cores are king, ML likes lots of cores and doesn’t worry too much about speed. You will also need a lot of memory. and I mean a lot. Being able to work on an entire dataset in memory will make a huge amount of difference to you. So when I talk about lots of memory I mean 128GB at a minimum and the more you can get the better. My current workstation is based around a dual socket Xeon and has 1/2TB of Ram. Yes, that is right 512GB of memory! Each Xeon has 18 cores each of two threads for a total of 72 threads. It may be better in some cases to switch off those dual threads and simple run the Xeons as 36 cores each of one thread as hyper threading is a pipelining technology which keeps the core busy whilst it waits for memory or other things. As a result it doesn’t really give you double the performance, more like an additional 10 – 20%.

By now you are probably thinking that this all sounds pretty unaffordable but the reality is that you can build most of your systems from old disused servers from one of the popular market places. I ended up getting two servers with identical processors so then invested in a brand new motherboard which would take both processors and all of the combined memory. I was lucky as I also picked up several SSDs with the servers. Normally those are scrapped for fear of data loss, but some sellers will professionally wipe the servers and break-up RAID arrays. As a result the base system cost me little more than the cost of a new high end motherboard.

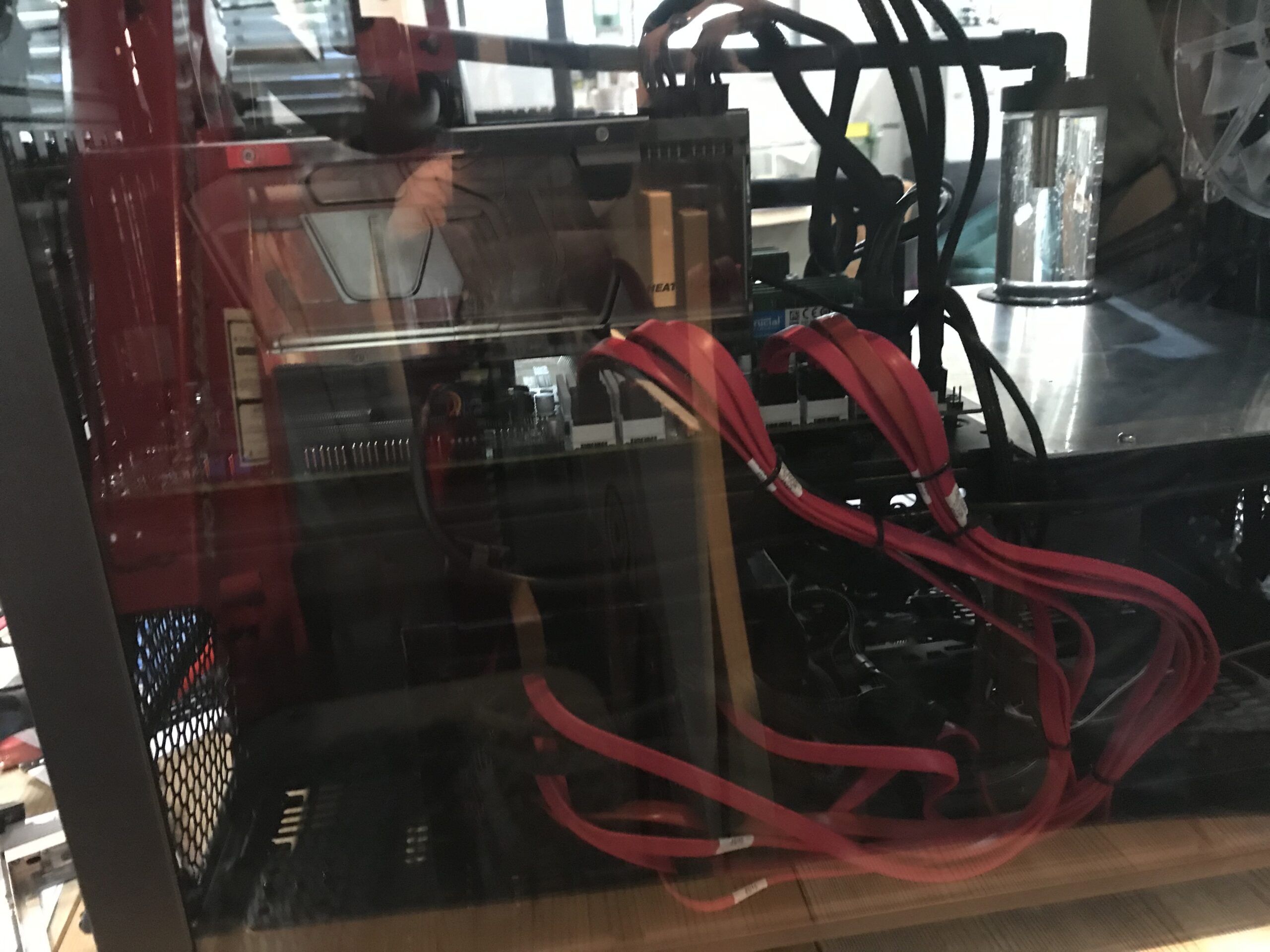

I selected a pretty big case for my workstation as I planned to add several GPUs and wanted the motherboard to sit horizontally to avoid GPU sag. I also wanted to add water cooling as the GPUs tend to generate a great deal of heat and often come in 2.5 card slots so there is little room between them for fan intakes and exhausts. This makes the cooling problem even worse and because this is a workstation and will sit next to my desk I wanted to also reduce noice and the fans are the main cause of that.

The case I selected was full of plate glass which I’m sure is loverly but no good for cooling solutions so half of it got ripped out and replaced with perforated aluminium sheet. I doubled my costs for a top of the range custom cooling solution and also custom made some parts like the mounting for the water pump and reservoir and a custom bulkhead which helps to mount the water reservoir. I finished off the custom work with a proprietary 2.5” disk mounting solution which allowed me to mount 12 SSDs in the space normally taken-up by three 5 1/4 drives.

I mentioned GPUs earlier. Both conventional Machine learning and Deep Learning benefit from being run on GPUs. Initially I fitted a single Titan RTX card and am about to fit a second card. Even second-hand these cards cost a fortune, and this was shortly before Nvidia released their 30 series cards. Some months later those cards are still incredibly scarce so the now two Titan RTXs will have to do. The secret with Deep Learning on graphics cards is they pretty much have to be Nvidia and you want as much memory on the cards as possible. Titan RTXs have 24GB of memory which allows you to run some pretty big models and/or batch sizes. There are deep learning libraries which will run on Intel or team Red (AMD) graphics cards but Nvidia is the simplest path for you to take.

The last thing to consider is power supply. This also needs to be big as you are going to be running most of this at maximum power draw for extended periods of time so I calculated that my rig with two GPUs installed was going to be coming in close to 1,200W at maximum load. Given that I want the option of upgrading or adding to those GPUs at some stage I went with a 1,600W power supply. Go for a high efficiency one of these as you have to pay for that electricity an low efficiency tends to mean more heat produced.

For an operating system you are going to want Linux which at least is free! Yes, you can use Windows but it is a less suitable platform for ML. If you must have Windows I suggest you dual-boot your platform.

All-in-all you should be able to build yourself a workstation for around £3,200 (assuming one high end GPU) or the equivalent in dollars or Euros. Buying second hand servers and parts in the US you are going to get better deals. Buying the same thing new, probably with less memory, is going to set you back four to six times as much. But again, if you are just starting out, consider renting rather than buying your hardware.

Edit – 16th Jan 2021

The Supermicro motherboard I chose proved challenging at times – not least just getting it to past POST (Power On Self Test) with two GPUs installed. Problem solved by limiting the number of PCIe slots to pass the BIOS through to. I now have two TITAN RTX cards installed and will shortly be putting it through its paces training a new model.

Edit – 23rd Jan 2021

I fitted an Nvidia Quadro RTX 6000 NVLink bridge – Two slot – between the two Titan cards. This, as mentioned, isn’t strictly necessary but does give me some options for some very memory hungry models. The NVLink bridges specific to Titan cards only comes in three or four slot options because of the width and cooling needs of the cards. Because one of my cards is water cooled I can get the air breather much closer to it which is why I needed a 2 slot variant. Yes, I will convert the second card to water breathing – when I have a chance, but probably will put that off until I need to drain the system anyway or if I acquire a third card.

+-----------------------------------------------------------------------------+ | NVIDIA-SMI 450.80.02 Driver Version: 450.80.02 CUDA Version: 11.0 | |-------------------------------+----------------------+----------------------+ | GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC | | Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. | | | | MIG M. | |===============================+======================+======================| | 0 TITAN RTX On | 00000000:02:00.0 On | N/A | | 41% 30C P8 28W / 280W | 498MiB / 24220MiB | 1% Default | | | | N/A | +-------------------------------+----------------------+----------------------+ | 1 TITAN RTX On | 00000000:81:00.0 Off | N/A | | 0% 31C P8 22W / 280W | 11MiB / 24220MiB | 0% Default | | | | N/A | +-------------------------------+----------------------+----------------------+ +-----------------------------------------------------------------------------+ | Processes: | | GPU GI CI PID Type Process name GPU Memory | | ID ID Usage | |=============================================================================| | 0 N/A N/A 3987 G /usr/lib/xorg/Xorg 102MiB | | 0 N/A N/A 4769 G /usr/lib/xorg/Xorg 211MiB | | 0 N/A N/A 4910 G /usr/bin/gnome-shell 171MiB | | 1 N/A N/A 3987 G /usr/lib/xorg/Xorg 4MiB | | 1 N/A N/A 4769 G /usr/lib/xorg/Xorg 4MiB | +-----------------------------------------------------------------------------+ nvidia-smi nvlink -c GPU 0: TITAN RTX (UUID: GPU-d2cd41cb-49bb-e58e-800f-2415ef8d9b7c) Link 0, P2P is supported: true Link 0, Access to system memory supported: true Link 0, P2P atomics supported: true Link 0, System memory atomics supported: true Link 0, SLI is supported: true Link 0, Link is supported: false Link 1, P2P is supported: true Link 1, Access to system memory supported: true Link 1, P2P atomics supported: true Link 1, System memory atomics supported: true Link 1, SLI is supported: true Link 1, Link is supported: false GPU 1: TITAN RTX (UUID: GPU-36a29228-ccce-4ac0-f158-dcbc6e721576) Link 0, P2P is supported: true Link 0, Access to system memory supported: true Link 0, P2P atomics supported: true Link 0, System memory atomics supported: true Link 0, SLI is supported: true Link 0, Link is supported: false Link 1, P2P is supported: true Link 1, Access to system memory supported: true Link 1, P2P atomics supported: true Link 1, System memory atomics supported: true Link 1, SLI is supported: true Link 1, Link is supported: false nvidia-smi nvlink -s GPU 0: TITAN RTX (UUID: GPU-d2cd41cb-49bb-e58e-800f-2415ef8d9b7c) Link 0: 25.781 GB/s Link 1: 25.781 GB/s GPU 1: TITAN RTX (UUID: GPU-36a29228-ccce-4ac0-f158-dcbc6e721576) Link 0: 25.781 GB/s Link 1: 25.781 GB/s

EDIT – 13th Feb 2021

Having run some benchmarks I’m now very happy. The performance is fairly close to a system running two professional quality cards (RTX Quadro 6000s) with NVLink. Those cards cost over £4K each and given that I managed to pick up the Titan cards for £1.2K each second hand I have very capable system indeed. Now on to my main project: https://infosecml.com/index.php/2020/12/27/improving-security-log-parsing-with-neural-networks/